docker-flannel

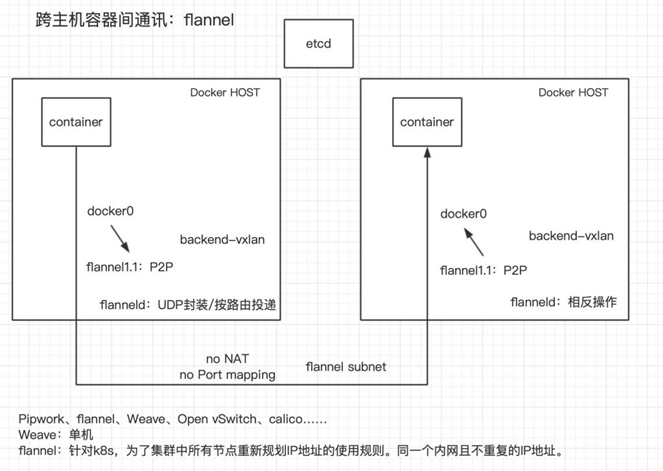

flannel

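- flannel assigns each host a subnet. Containers take their IPs from that subnet, and those IPs are routable between hosts.

- Containers can communicate across hosts without NAT or port mapping.

- Forwarding decisions follow the routing table's longest-prefix-match rule.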
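The longest-prefix-match rule mentioned above can be sketched in plain shell. This is only an illustration of the rule, not how the kernel implements it; the function names `ip_to_int` and `longest_match` are made up for this example, and the route table is a hypothetical one modelled on the hosts used later in this article.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Print the most specific route ("<cidr> <device>") that matches address $1.
# Routes are read from stdin, one "<cidr> <device>" pair per line.
longest_match() {
    dst=$(ip_to_int "$1")
    best_len=-1
    best=""
    while read -r cidr dev; do
        [ -n "$cidr" ] || continue
        net=$(ip_to_int "${cidr%/*}")
        len=${cidr#*/}
        # 4294967295 is 0xFFFFFFFF; keep only the top $len bits as the mask.
        mask=$(( (4294967295 << (32 - len)) & 4294967295 ))
        if [ $(( dst & mask )) -eq $(( net & mask )) ] && [ "$len" -gt "$best_len" ]; then
            best_len=$len
            best="$cidr $dev"
        fi
    done
    echo "$best"
}

# Hypothetical route table: a default route, the overlay /16 and two /24 subnets.
routes='0.0.0.0/0 ens33
10.10.0.0/16 flannel.1
10.10.85.0/24 docker0
10.10.88.0/24 ens33'

printf '%s\n' "$routes" | longest_match 10.10.88.2   # 10.10.88.0/24 ens33
printf '%s\n' "$routes" | longest_match 10.10.99.7   # 10.10.0.0/16 flannel.1
```

Both the /16 and the /24 contain 10.10.88.2, but the /24 wins because its prefix is longer.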

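flannel data forwarding

- flannel keeps the network configuration and each host's subnet lease in etcd; the backend does the actual forwarding of packets between hosts.

- How packets travel between hosts is decided by the backend. flannel offers several backends; the most commonly used are vxlan and host-gw.

- For the other backends and their parameters, see https://github.com/coreos/flannel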

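How flannel forwards traffic

When a flannel network is created, the user picks one large network range and writes it to etcd. The flanneld on every host that uses this etcd cluster reads the range and leases a subnet of it for its host, and docker0 is reconfigured to use that subnet. In vxlan mode flanneld also creates a flannel.1 interface, which performs the UDP encapsulation (UDP is used as the transport partly because it passes through firewalls easily).

In vxlan mode, when a container sends data to a container on another host, the packet passes through docker0 and is forwarded to the flannel.1 interface. There it is encapsulated in UDP point-to-point, the routing table (longest-prefix match) picks the best route, and the packet is sent to the matching host. That host performs the reverse steps: UDP decapsulation, then docker0, then the container.

In host-gw mode, flanneld uses the information in etcd to create a route on each host to every peer host's subnet. A container's packets go through docker0, which acts as the subnet gateway, and the host's routing table then sends them straight to the peer host. No UDP encapsulation by flannel is involved.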
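As a rough sketch of the host-gw idea, the routes a host needs can be derived from a list of "subnet, owning host" pairs. The function name `hostgw_routes` and the input format are assumptions made for this example (flanneld reads its leases from etcd in its own format), and the commands are only printed, not executed. The addresses are the ones used later in this article.

```shell
#!/bin/sh
# Print the `ip route add` commands a host-gw node would need.
# $1: this host's IP, $2: outgoing device (defaults to ens33).
# stdin: one "<container-subnet> <host-ip>" pair per line.
hostgw_routes() {
    self=$1
    dev=${2:-ens33}
    while read -r subnet host; do
        [ -n "$subnet" ] || continue
        # The local subnet is reached through docker0, so only peers get a route.
        if [ "$host" != "$self" ]; then
            echo "ip route add $subnet via $host dev $dev"
        fi
    done
}

leases='10.10.85.0/24 192.168.100.211
10.10.88.0/24 192.168.100.212'

printf '%s\n' "$leases" | hostgw_routes 192.168.100.211
# ip route add 10.10.88.0/24 via 192.168.100.212 dev ens33
```

Each peer subnet gets a route whose next hop is the physical address of the host that owns it, which is why no encapsulation is needed.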

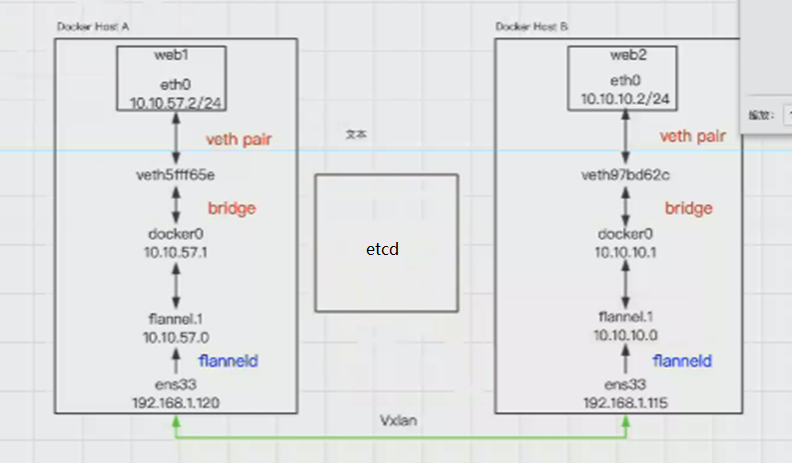

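Walk-through based on the figure above

When web1 sends a message to web2, the packet first goes through docker0. If the hosts run in vxlan mode, it is then handed to flannel.1, where longest-prefix matching selects the route closest to web2's address range. The packet is UDP-encapsulated and sent to the host that owns that range; the receiving host's flannel.1 decapsulates it and passes it through docker0 to web2.

If the hosts run in host-gw mode, the packet again leaves through docker0, but then follows the physical host's routing table. The most specific matching route points at the peer host, the packet is sent there directly, and the peer host performs the reverse steps to deliver it.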

Configuring flannel

| Host | Services |
|---|---|
| 192.168.100.211 | docker, etcd, flanneld |
| 192.168.100.212 | docker, etcd, flanneld |

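Preparing the environment

- Before using flannel, disable the firewall and SELinux, and enable IP forwarding.

- If the firewall stays on, open ports 2379, 2380 and 4001 (the etcd client and peer ports).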
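The commands for enabling forwarding and for opening the etcd ports are not shown in this article. A minimal sketch, assuming a CentOS 7 host with firewalld: the `run` and `prepare_host` helpers are made up for this example, and by default the commands are only printed (set `DRY_RUN=0` and run as root to execute them).

```shell
#!/bin/sh
# Dry-run helper: print the command unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

prepare_host() {
    # Enable IPv4 forwarding (not persistent across reboots).
    run sysctl -w net.ipv4.ip_forward=1
    # Alternative to disabling firewalld: open the etcd ports listed above.
    for port in 2379 2380 4001; do
        run firewall-cmd --permanent --add-port="${port}/tcp"
    done
    run firewall-cmd --reload
}

prepare_host
```

If firewalld stays enabled, the traffic of the chosen flannel backend has to be allowed between the hosts as well.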

    [root@localhost ~]# systemctl disable firewalld
    Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.
    Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.
    [root@localhost ~]# systemctl stop firewalld
    [root@localhost ~]# vim /etc/selinux/config
    SELINUX=disabled
    [root@localhost ~]# setenforce 0
    [root@localhost ~]# getenforce
    Permissive

Allow forwarded traffic in iptables

    [root@localhost ~]# iptables -P FORWARD ACCEPT

Install flannel and etcd

    [root@localhost ~]# yum -y install flannel etcd

Configure etcd

- 192.168.100.211

- Edit the etcd configuration file so that etcd on the two docker hosts forms one cluster, which flannel then uses to hand out IP subnets.

    [root@localhost ~]# cp -p /etc/etcd/etcd.conf /etc/etcd/etcd.conf.bak
    [root@localhost ~]# vim /etc/etcd/etcd.conf
    # etcd data directory; named after ETCD_NAME for convenience
    ETCD_DATA_DIR="/var/lib/etcd/etcd1"
    # URL this member listens on for traffic from other members
    ETCD_LISTEN_PEER_URLS="http://192.168.100.211:2380"
    # URLs clients connect to in order to talk to etcd
    ETCD_LISTEN_CLIENT_URLS="http://192.168.100.211:2379,http://127.0.0.1:2379"
    # Member name; must be different on every host
    ETCD_NAME="etcd1"
    # Peer URL of this member, advertised to the other members of the cluster
    ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.100.211:2380"
    # Client URL of this member, advertised to the other members of the cluster
    ETCD_ADVERTISE_CLIENT_URLS="http://192.168.100.211:2379"
    # All members of the cluster
    ETCD_INITIAL_CLUSTER="etcd1=http://192.168.100.211:2380,etcd2=http://192.168.100.212:2380"
    # Cluster token; choose your own, identical on all members of the same cluster
    ETCD_INITIAL_CLUSTER_TOKEN="etcd-test"
    # "new" when creating a cluster, "existing" when joining one that already exists
    ETCD_INITIAL_CLUSTER_STATE="new"

- 192.168.100.212

    [root@localhost ~]# cp -p /etc/etcd/etcd.conf /etc/etcd/etcd.conf.bak
    [root@localhost ~]# vim /etc/etcd/etcd.conf
    # etcd data directory; named after ETCD_NAME for convenience
    ETCD_DATA_DIR="/var/lib/etcd/etcd2"
    # URL this member listens on for traffic from other members
    ETCD_LISTEN_PEER_URLS="http://192.168.100.212:2380"
    # URLs clients connect to in order to talk to etcd
    ETCD_LISTEN_CLIENT_URLS="http://192.168.100.212:2379,http://127.0.0.1:2379"
    # Member name; must be different on every host
    ETCD_NAME="etcd2"
    # Peer URL of this member, advertised to the other members of the cluster
    ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.100.212:2380"
    # Client URL of this member, advertised to the other members of the cluster
    ETCD_ADVERTISE_CLIENT_URLS="http://192.168.100.212:2379"
    # All members of the cluster
    ETCD_INITIAL_CLUSTER="etcd1=http://192.168.100.211:2380,etcd2=http://192.168.100.212:2380"
    # Cluster token; choose your own, identical on all members of the same cluster
    ETCD_INITIAL_CLUSTER_TOKEN="etcd-test"
    # "new" when creating a cluster, "existing" when joining one that already exists
    ETCD_INITIAL_CLUSTER_STATE="new"

Make etcd pick up the configuration variables

- By default, the settings changed above are not picked up when etcd starts, so the matching flags have to be added to the unit file.

    # On both hosts
    [root@localhost ~]# vim /usr/lib/systemd/system/etcd.service
    # On line 13 (the one starting with ExecStart), add the following just inside the closing quote at the end
    # Keep everything on one line, separated by spaces
    --listen-peer-urls=\"${ETCD_LISTEN_PEER_URLS}\" --advertise-client-urls=\"${ETCD_ADVERTISE_CLIENT_URLS}\" --initial-cluster-token=\"${ETCD_INITIAL_CLUSTER_TOKEN}\" --initial-cluster=\"${ETCD_INITIAL_CLUSTER}\" --initial-cluster-state=\"${ETCD_INITIAL_CLUSTER_STATE}\"
    # The resulting line:
    ExecStart=/bin/bash -c "GOMAXPROCS=$(nproc) /usr/bin/etcd --name=\"${ETCD_NAME}\" --data-dir=\"${ETCD_DATA_DIR}\" --listen-client-urls=\"${ETCD_LISTEN_CLIENT_URLS}\" --listen-peer-urls=\"${ETCD_LISTEN_PEER_URLS}\" --advertise-client-urls=\"${ETCD_ADVERTISE_CLIENT_URLS}\" --initial-cluster=\"${ETCD_INITIAL_CLUSTER}\" --initial-cluster-token=\"${ETCD_INITIAL_CLUSTER_TOKEN}\" --initial-cluster-state=\"${ETCD_INITIAL_CLUSTER_STATE}\""

Start etcd (both hosts)

    [root@localhost ~]# systemctl daemon-reload
    [root@localhost ~]# systemctl start etcd

Check cluster health

    [root@localhost ~]# etcdctl cluster-health
    member 146f6898c619f119 is healthy: got healthy result from http://192.168.100.211:2379
    member 3f00837914f85672 is healthy: got healthy result from http://192.168.100.212:2379
    cluster is healthy
    # Check which member is the cluster leader
    [root@localhost ~]# etcdctl member list
    146f6898c619f119: name=etcd1 peerURLs=http://192.168.100.211:2380 clientURLs=http://192.168.100.211:2379 isLeader=true
    3f00837914f85672: name=etcd2 peerURLs=http://192.168.100.212:2380 clientURLs=http://192.168.100.212:2379 isLeader=false
    # isLeader=true marks etcd1 (192.168.100.211) as the leader; writes such as flannel's subnet leases are committed through it
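Reading the leader off the `member list` output by eye is easy to get wrong. A small sketch that extracts it, assuming the etcdctl v2 output format shown above (the helper name `leader_name` is made up for this example):

```shell
#!/bin/sh
# Read `etcdctl member list` output on stdin and print the leader's name.
leader_name() {
    awk '/isLeader=true/ {
        for (i = 1; i <= NF; i++)
            if ($i ~ /^name=/) { sub(/^name=/, "", $i); print $i }
    }'
}

# Sample input copied from the output above.
members='146f6898c619f119: name=etcd1 peerURLs=http://192.168.100.211:2380 clientURLs=http://192.168.100.211:2379 isLeader=true
3f00837914f85672: name=etcd2 peerURLs=http://192.168.100.212:2380 clientURLs=http://192.168.100.212:2379 isLeader=false'

printf '%s\n' "$members" | leader_name   # etcd1
```

On a live host the same function can be fed directly: `etcdctl member list | leader_name`.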

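Container connectivity with the flannel vxlan backend

This backend works by encapsulating container traffic in UDP.

The configuration file /root/etcd.json tells flannel, through etcd, which backend type to use and which overall network range (10.10.0.0/16) to draw from. Each host then gets a subnet with a 24-bit mask out of that range, and container IPs are assigned from it automatically.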
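The arithmetic behind a /16 network split into /24 subnets can be checked with two small helper functions (the names are made up for this example). The counts are upper bounds that follow purely from the prefix lengths.

```shell
#!/bin/sh
# Number of subnets of prefix length $2 that fit into a network of prefix length $1.
subnet_count() {
    echo $(( 1 << ($2 - $1) ))
}

# Addresses left for containers in a subnet of prefix length $1:
# all addresses minus network, broadcast and the gateway held by docker0.
container_addrs() {
    echo $(( (1 << (32 - $1)) - 3 ))
}

subnet_count 16 24     # 256
container_addrs 24     # 253
```

So this configuration leaves room for at most 256 host subnets, each with up to 253 container addresses.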

    [root@localhost ~]# vim /root/etcd.json
    {
      "NetWork":"10.10.0.0/16",
      "SubnetLen":24,
      "Backend":{
        "Type":"vxlan"
      }
    }

Import the file into the etcd cluster

/usr/local/bin/network/config is not a path on the host's filesystem; it is a key inside etcd that flannel reads.

    [root@localhost ~]# etcdctl --endpoints=http://192.168.100.211:2379 set /usr/local/bin/network/config < /root/etcd.json
    {
      "NetWork":"10.10.0.0/16",
      "SubnetLen":24,
      "Backend":{
        "Type":"vxlan"
      }
    }
    # On etcd2, check that the value can be read from the cluster
    [root@localhost ~]# etcdctl get /usr/local/bin/network/config
    {
      "NetWork":"10.10.0.0/16",
      "SubnetLen":24,
      "Backend":{
        "Type":"vxlan"
      }
    }

Update the flannel configuration file

This points flannel at the etcd key written above.

    # 192.168.100.211
    [root@localhost ~]# vim /etc/sysconfig/flanneld
    FLANNEL_ETCD_ENDPOINTS="http://192.168.100.211:2379"
    FLANNEL_ETCD_PREFIX="/usr/local/bin/network"
    # 192.168.100.212
    [root@localhost ~]# vim /etc/sysconfig/flanneld
    FLANNEL_ETCD_ENDPOINTS="http://192.168.100.212:2379"
    FLANNEL_ETCD_PREFIX="/usr/local/bin/network"
    # Start the service on both hosts
    [root@localhost ~]# systemctl enable flanneld
    [root@localhost ~]# systemctl start flanneld

Check the subnet flannel assigned

After flanneld starts, each host is given a randomly chosen subnet from the 10.10.0.0/16 pool.

    [root@localhost ~]# ip a
    # 192.168.100.211
    6: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default
        link/ether 0e:bc:ce:0b:12:1f brd ff:ff:ff:ff:ff:ff
        inet 10.10.85.0/32 scope global flannel.1
           valid_lft forever preferred_lft forever
        inet6 fe80::cbc:ceff:fe0b:121f/64 scope link
           valid_lft forever preferred_lft forever
    # 192.168.100.212
    6: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default
        link/ether fe:78:71:7b:17:ee brd ff:ff:ff:ff:ff:ff
        inet 10.10.88.0/32 scope global flannel.1
           valid_lft forever preferred_lft forever
        inet6 fe80::fc78:71ff:fe7b:17ee/64 scope link
           valid_lft forever preferred_lft forever
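The MTU of 1450 on flannel.1 follows from the size of the VXLAN headers. A small sketch of that calculation, assuming an IPv4 underlay and a physical MTU of 1500; the function name `overlay_mtu` is made up for this example.

```shell
#!/bin/sh
# Bytes added by VXLAN over IPv4: outer IP header (20) + UDP header (8)
# + VXLAN header (8) + the encapsulated inner Ethernet header (14).
VXLAN_OVERHEAD=$(( 20 + 8 + 8 + 14 ))

# Print the MTU usable inside the container network.
# $1: backend type, $2: MTU of the physical interface.
overlay_mtu() {
    backend=$1
    phys_mtu=$2
    case "$backend" in
        vxlan)   echo $(( phys_mtu - VXLAN_OVERHEAD )) ;;
        host-gw) echo "$phys_mtu" ;;
        *)       echo "unknown backend: $backend" >&2; return 1 ;;
    esac
}

overlay_mtu vxlan 1500     # 1450
overlay_mtu host-gw 1500   # 1500
```

host-gw adds no headers, so the full 1500 bytes stay available, which matches the FLANNEL_MTU value seen in host-gw mode later on.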

Connecting docker0 to flannel.1

- Check the MTU and the gateway address of the flannel subnet

    # 192.168.100.211
    [root@localhost ~]# cat /run/flannel/subnet.env
    FLANNEL_NETWORK=10.10.0.0/16
    FLANNEL_SUBNET=10.10.85.1/24
    FLANNEL_MTU=1450
    FLANNEL_IPMASQ=false
    # 192.168.100.212
    [root@localhost ~]# cat /run/flannel/subnet.env
    FLANNEL_NETWORK=10.10.0.0/16
    FLANNEL_SUBNET=10.10.88.1/24
    FLANNEL_MTU=1450
    FLANNEL_IPMASQ=false

Edit the docker startup options

    # 192.168.100.211
    [root@localhost ~]# vim /usr/lib/systemd/system/docker.service
    # line 14
    ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --bip=10.10.85.1/24 --mtu=1450
    # 192.168.100.212
    [root@localhost ~]# vim /usr/lib/systemd/system/docker.service
    # line 14
    ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --bip=10.10.88.1/24 --mtu=1450
    # Restart docker
    [root@localhost ~]# systemctl daemon-reload
    [root@localhost ~]# systemctl restart docker

Check that docker0 has moved to the 10.x network

    [root@localhost ~]# ip a
    # 192.168.100.211
    5: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
        link/ether 02:42:d0:82:52:93 brd ff:ff:ff:ff:ff:ff
        inet 10.10.85.1/24 brd 10.10.85.255 scope global docker0
           valid_lft forever preferred_lft forever
        inet6 fe80::42:d0ff:fe82:5293/64 scope link
           valid_lft forever preferred_lft forever
    6: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default
        link/ether 0e:bc:ce:0b:12:1f brd ff:ff:ff:ff:ff:ff
        inet 10.10.85.0/32 scope global flannel.1
           valid_lft forever preferred_lft forever
        inet6 fe80::cbc:ceff:fe0b:121f/64 scope link
           valid_lft forever preferred_lft forever
    # 192.168.100.212
    5: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
        link/ether 02:42:db:e3:fc:2a brd ff:ff:ff:ff:ff:ff
        inet 10.10.88.1/24 brd 10.10.88.255 scope global docker0
           valid_lft forever preferred_lft forever
        inet6 fe80::42:dbff:fee3:fc2a/64 scope link
           valid_lft forever preferred_lft forever
    6: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default
        link/ether fe:78:71:7b:17:ee brd ff:ff:ff:ff:ff:ff
        inet 10.10.88.0/32 scope global flannel.1
           valid_lft forever preferred_lft forever
        inet6 fe80::fc78:71ff:fe7b:17ee/64 scope link
           valid_lft forever preferred_lft forever

The hosts in the cluster gain a route for 10.10.0.0/16

    # The routing table now has an entry for the overlay network, so traffic arriving from docker0 is forwarded through flannel.1
    [root@localhost ~]# ip r
    default via 192.168.1.3 dev ens33 proto static metric 100
    10.10.0.0/16 dev flannel.1
    172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1
    192.168.1.0/24 dev ens33 proto kernel scope link src 192.168.100.211 metric 10
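The `--bip` and `--mtu` values in docker.service were copied by hand from /run/flannel/subnet.env. A minimal sketch of deriving them from that file instead, so the two cannot drift apart; the function name `docker_opts_from_flannel` is made up for this example, and the file is assumed to contain the FLANNEL_SUBNET and FLANNEL_MTU variables shown earlier.

```shell
#!/bin/sh
# Print the dockerd flags that match a flannel subnet.env file.
# $1: path to the env file (defaults to /run/flannel/subnet.env).
docker_opts_from_flannel() {
    env_file=${1:-/run/flannel/subnet.env}
    [ -r "$env_file" ] || { echo "cannot read $env_file" >&2; return 1; }
    # Source the file in a subshell so FLANNEL_* variables do not leak out.
    (
        . "$env_file"
        echo "--bip=${FLANNEL_SUBNET} --mtu=${FLANNEL_MTU}"
    )
}

# Example on a flannel host:
#   docker_opts_from_flannel /run/flannel/subnet.env
```

On 192.168.100.211 with the subnet.env shown earlier, this would print `--bip=10.10.85.1/24 --mtu=1450`.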

Start containers. Containers on the two hosts can reach each other, because flannel provides no isolation between them.

    # 192.168.100.211
    [root@localhost ~]# docker run -itd --name bbox1 busybox
    546d5f6c62a84a4ff8e5fa670b926f7155dd1f6426f31495370cb949b4ad2bfb
    [root@localhost ~]# docker exec -it bbox1 ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    9: eth0@if10: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue
        link/ether 02:42:0a:0a:55:02 brd ff:ff:ff:ff:ff:ff
        inet 10.10.85.2/24 brd 10.10.85.255 scope global eth0
           valid_lft forever preferred_lft forever
    # 192.168.100.212
    [root@localhost ~]# docker run -itd --name bbox2 busybox
    99a6f6729ceda4bdd7483c4ad0cb831bbf24d715a7ce4af6fdf8bcd037d188c6
    [root@localhost ~]# docker exec -it bbox2 ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    9: eth0@if10: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue
        link/ether 02:42:0a:0a:58:02 brd ff:ff:ff:ff:ff:ff
        inet 10.10.88.2/24 brd 10.10.88.255 scope global eth0
           valid_lft forever preferred_lft forever
    # bbox1 received an address from 10.10.85.0/24
    # bbox2 received an address from 10.10.88.0/24

- Connectivity test

    [root@localhost ~]# docker exec -it bbox1 sh
    / # ping 10.10.88.2
    PING 10.10.88.2 (10.10.88.2): 56 data bytes
    64 bytes from 10.10.88.2: seq=0 ttl=62 time=7.319 ms
    64 bytes from 10.10.88.2: seq=1 ttl=62 time=0.344 ms
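To confirm that the ping really travels through the VXLAN tunnel, the flannel.1 device and the traffic on the physical interface can be inspected. This is a sketch only: the `run` and `inspect_vxlan` helpers are made up for this example, the interface names are the ones used in this article, and UDP port 8472 is the vxlan backend's default. The commands are printed by default; set `DRY_RUN=0` on a flannel host (as root) to run them.

```shell
#!/bin/sh
# Dry-run helper: print the command unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# $1: VXLAN device (default flannel.1), $2: physical interface (default ens33).
inspect_vxlan() {
    dev=${1:-flannel.1}
    uplink=${2:-ens33}
    # VXLAN details of the device (VNI, local address, destination port).
    run ip -d link show "$dev"
    # Forwarding entries that map peer MAC addresses to peer host IPs.
    run bridge fdb show dev "$dev"
    # Capture a few encapsulated packets while the ping is running.
    run tcpdump -c 4 -nn -i "$uplink" udp port 8472
}

inspect_vxlan
```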

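Connectivity in host-gw mode

This mode forwards by plain routing: packets follow route entries and are not UDP-encapsulated through flannel.1.

Building on the setup above, only the backend type in the address-pool file imported into etcd needs to change.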

    [root@localhost ~]# vim etcd.json
    {
      "NetWork":"10.10.0.0/16",
      "SubnetLen":24,
      "Backend":{
        "Type":"host-gw"
      }
    }
    # Import it into etcd again
    [root@localhost ~]# etcdctl --endpoints=http://192.168.100.211:2379 set /usr/local/bin/network/config < /root/etcd.json
    {
      "NetWork":"10.10.0.0/16",
      "SubnetLen":24,
      "Backend":{
        "Type":"host-gw"
      }
    }

Check on the other host that the value was imported

    [root@localhost ~]# etcdctl get /usr/local/bin/network/config
    {
      "NetWork":"10.10.0.0/16",
      "SubnetLen":24,
      "Backend":{
        "Type":"host-gw"
      }
    }

Restart flanneld and check that the MTU is now 1500

    # 192.168.100.211
    [root@localhost ~]# systemctl restart flanneld
    [root@localhost ~]# cat /run/flannel/subnet.env
    FLANNEL_NETWORK=10.10.0.0/16
    FLANNEL_SUBNET=10.10.85.1/24
    FLANNEL_MTU=1500
    FLANNEL_IPMASQ=false
    # Check the routing table
    [root@localhost ~]# ip r
    default via 192.168.100.2 dev ens33 proto static metric 100
    10.10.0.0/16 dev flannel.1
    10.10.85.0/24 dev docker0 proto kernel scope link src 10.10.85.1
    10.10.88.0/24 via 192.168.100.212 dev ens33
    192.168.100.0/24 dev ens33 proto kernel scope link src 192.168.100.211 metric 100
    192.168.122.0/24 dev virbr0 proto kernel scope link src 192.168.122.1
    # 192.168.100.212
    [root@localhost ~]# systemctl restart flanneld
    [root@localhost ~]# cat /run/flannel/subnet.env
    FLANNEL_NETWORK=10.10.0.0/16
    FLANNEL_SUBNET=10.10.88.1/24
    FLANNEL_MTU=1500
    FLANNEL_IPMASQ=false
    # Check the routing table
    [root@localhost ~]# ip r
    default via 192.168.100.2 dev ens33 proto static metric 100
    10.10.0.0/16 dev flannel.1
    10.10.85.0/24 via 192.168.100.211 dev ens33
    10.10.88.0/24 dev docker0 proto kernel scope link src 10.10.88.1
    192.168.100.0/24 dev ens33 proto kernel scope link src 192.168.100.212 metric 100
    192.168.122.0/24 dev virbr0 proto kernel scope link src 192.168.122.1

Each host now has a route for the container subnet of the other host in the cluster, with the physical address of the host that owns that subnet as the next hop:

    10.10.88.0/24 via 192.168.100.212 dev ens33

    10.10.85.0/24 via 192.168.100.211 dev ens33

In other words, traffic between containers on the two hosts is now forwarded through each host's ens33 interface.

Change the MTU in the docker startup options (both hosts)

    [root@localhost ~]# vim /usr/lib/systemd/system/docker.service
    # Change the flag to --mtu=1500
    # Restart docker
    [root@localhost ~]# systemctl daemon-reload
    [root@localhost ~]# systemctl restart docker

- Connectivity test

    # 192.168.100.211
    [root@localhost ~]# docker run -itd --name bbox4 busybox
    e0347c838d53f724470377e2d51aedf2b0d2ec1826bb53d7afb1946ccf3ffe58
    # 192.168.100.212
    [root@localhost ~]# docker run -itd --name bbox3 busybox
    bf0c3587d2e7ecf58605115259a835c7f6df08285eff2ac70b8d62b42c2debf0
    [root@localhost ~]# docker exec -it bbox3 sh
    / # ping 10.10.85.2
    PING 10.10.85.2 (10.10.85.2): 56 data bytes
    64 bytes from 10.10.85.2: seq=0 ttl=62 time=0.428 ms
    64 bytes from 10.10.85.2: seq=1 ttl=62 time=0.460 ms
    / # traceroute 10.10.85.2
    traceroute to 10.10.85.2 (10.10.85.2), 30 hops max, 46 byte packets
     1  loaclhost (10.10.88.1)  0.010 ms  0.008 ms  0.005 ms
     2  192.168.100.211 (192.168.100.211)  0.398 ms  0.440 ms  0.328 ms
     3  loaclhost (10.10.85.2)  0.204 ms  0.393 ms  1.410 ms
    # The trace shows that traffic between the two containers is forwarded via the ens33 address of the physical host.
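A quick way to confirm that host-gw installed its routes is to filter the `ip r` output for non-default routes that go through a gateway. A small sketch (the helper name `gateway_routes` is made up for this example; the sample input is copied from the routing table of 192.168.100.211 shown earlier):

```shell
#!/bin/sh
# Read `ip r` output on stdin and print "<subnet> <next-hop>" for every
# non-default route that is reached through a gateway.
gateway_routes() {
    awk '$1 != "default" && $2 == "via" { print $1, $3 }'
}

table='default via 192.168.100.2 dev ens33 proto static metric 100
10.10.0.0/16 dev flannel.1
10.10.85.0/24 dev docker0 proto kernel scope link src 10.10.85.1
10.10.88.0/24 via 192.168.100.212 dev ens33
192.168.100.0/24 dev ens33 proto kernel scope link src 192.168.100.211 metric 100'

printf '%s\n' "$table" | gateway_routes   # 10.10.88.0/24 192.168.100.212
```

On a live host: `ip r | gateway_routes`. Each line printed should name a peer's container subnet and that peer's physical address.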

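Differences between vxlan and host-gw

host-gw makes each physical host the gateway for its own container subnet; every host knows the other hosts' subnets and where to forward traffic for them.

vxlan builds VXLAN tunnels between the physical hosts, so containers on different hosts all sit in one large overlay network.

Although the two backends connect containers by different mechanisms, nothing has to change inside the containers themselves.

Because vxlan has to wrap every packet in extra headers, it performs worse. host-gw skips that step and performs comparatively better.

When no backend is specified, flannel falls back to the udp backend, which sends its encapsulated packets on UDP port 8285. The vxlan backend uses UDP port 8472. host-gw does no encapsulation and therefore needs no port of its own.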
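For firewall rules it helps to have the backend-to-port mapping in one place. A tiny lookup sketch using flannel's default ports (the function name `backend_udp_port` is made up for this example; a deployment that overrides the port in the backend configuration would differ):

```shell
#!/bin/sh
# Print the default UDP port used for encapsulation by a flannel backend,
# or "none" when the backend does not encapsulate.
backend_udp_port() {
    case "$1" in
        udp)     echo 8285 ;;
        vxlan)   echo 8472 ;;
        host-gw) echo none ;;
        *)       echo "unknown backend: $1" >&2; return 1 ;;
    esac
}

for b in udp vxlan host-gw; do
    echo "$b: $(backend_udp_port "$b")"
done
```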

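All articles on this blog are written for learning purposes. If you spot anything wrong, feel free to get in touch so we can discuss and learn together. Email: 1248287831@qq.com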